The technology behind the retinal scanner depends on the uniqueness of each person's retina. Fortunately, even identical twins have different retinas. More specifically, it is the pattern of capillaries (tiny blood vessels) in the retina that sets one retina apart from another. These capillaries have a different density than the tissue that surrounds them, which means that an infrared beam shone directly at the retina is absorbed more by some regions than by others. By using a lens to read the reflection of the infrared beam, the scanner captures a map of the user's capillaries. It can then overlay this reading on the reading on file and, because the retina's pattern stays essentially fixed, check for a match.

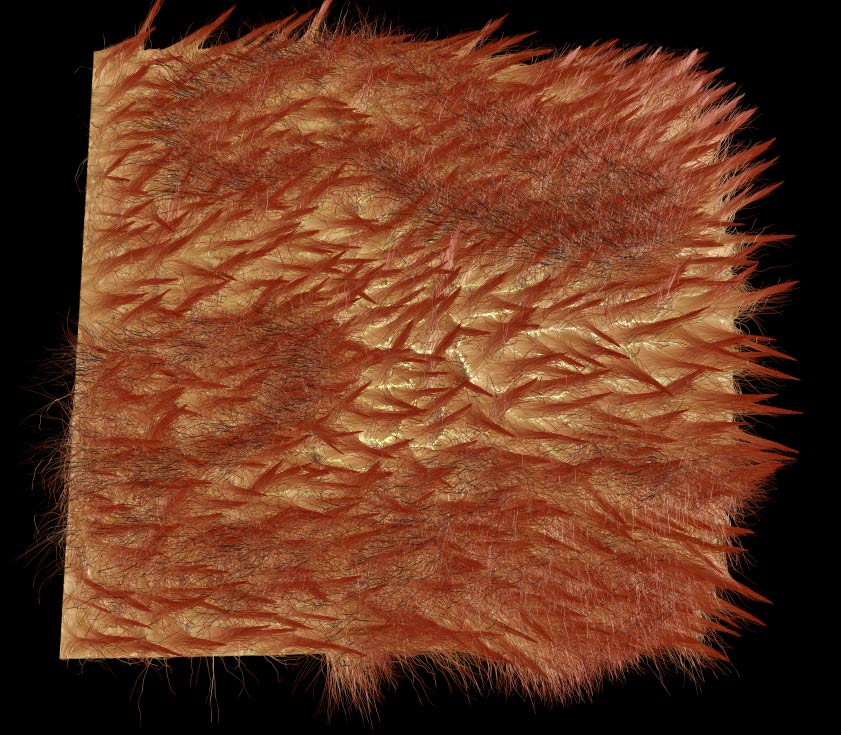

A map of retinal capillaries
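
To make the overlay step concrete, here is a minimal sketch in Python. It is an illustration only, not how any real scanner is implemented. It assumes the reflected infrared reading arrives as a small grid of intensities between 0 and 1, that darker cells (more absorption) mark capillaries, and that two maps are already aligned. The function names, the `threshold` of 0.5, and the `min_score` of 0.8 are all hypothetical choices.

```python
def reflectance_to_vessel_map(reflectance, threshold=0.5):
    """Mark a cell as vessel (1) when little light is reflected back.

    Assumption: low reflected intensity means more infrared was absorbed,
    which this sketch treats as the sign of a capillary.
    """
    return [[1 if value < threshold else 0 for value in row]
            for row in reflectance]


def overlap_score(scan, enrolled):
    """Overlay two binary maps and return their Jaccard similarity (0.0 to 1.0)."""
    both = 0    # cells marked as vessel in both maps
    either = 0  # cells marked as vessel in at least one map
    for scan_row, enrolled_row in zip(scan, enrolled):
        for s, e in zip(scan_row, enrolled_row):
            both += s & e
            either += s | e
    return both / either if either else 0.0


def is_match(scan, enrolled, min_score=0.8):
    """Accept the scan when it overlaps the enrolled map closely enough."""
    return overlap_score(scan, enrolled) >= min_score


# Hypothetical enrolled map (the "reading on file") and a fresh reading.
enrolled_map = [[0, 1, 0],
                [1, 1, 0],
                [0, 0, 1]]
fresh_reading = [[0.9, 0.2, 0.8],
                 [0.3, 0.1, 0.7],
                 [0.9, 0.8, 0.2]]

fresh_map = reflectance_to_vessel_map(fresh_reading)
print(is_match(fresh_map, enrolled_map))
```

A real device would also have to cope with eye rotation, noise, and partial readings, none of which this sketch attempts.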

Retinal scanners can produce errors when medical conditions are involved. Diabetes, glaucoma, and other diseases can alter the pattern of capillaries within the retina, rendering the initial reading on file useless. Another downside of retinal scanners is, as you might imagine, their very high cost. Even as the technology begins to spread, the price remains steep. Still, the fact that retinal scanners exist outside the movie theater is an exciting step toward us all living in a sci-fi reality.

https://en.wikipedia.org/wiki/Retinal_scan

http://www.armedrobots.com/new-retinal-scanners-can-find-you-in-a-crowd-in-dim-light

http://www.oculist.net/others/ebook/generalophthal/server-java/arknoid/amed/vaughan/co_chapters/ch015/ch015_print_01.html